Weekend Project - Test Cluster

Equipment

- 5 Lenovo ThinkCentre machines (C01, C04, C05, C06, C17)

- Cisco 2950

Setup

C01 will be the master server, with apt-cacher for installation and a PXE netinstall server. It will also run MRTG and Nagios.

- C01 is connected to Gi0/2

- C04 is connected to Fa0/1

- C05 is connected to Fa0/2

- C06 is connected to Fa0/3

- C17 is connected to Fa0/4

2950

enable password cisco

!

interface range fa 0/1 - 24

switchport mode access

switchport access vlan 2

spanning-tree portfast

!

interface range gi 0/1 - 2

switchport mode access

switchport access vlan 2

spanning-tree portfast

!

interface Vlan2

ip address 10.1.2.50 255.255.255.0

!

snmp-server community public RO

!

line con 0

line vty 0 4

no login

line vty 5 15

no login

C01

This node is the installation and management node for the cluster.

Standard applications

Install the necessary packages plus a few extras.

aptitude -y install apt-cacher tftpd-hpa tftp-hpa xinetd nagios3 mrtg nmap screen bmon iperf bonnie++ lmbench lm-sensors snmpd snmp build-essential gcc openssh-client nfs-kernel-server

Apt-cacher

Edit /etc/apt-cacher/apt-cacher.conf:

path_map = ubuntu de.archive.ubuntu.com/ubuntu; ubuntu-updates de.archive.ubuntu.com/ubuntu ; ubuntu-security security.ubuntu.com/ubuntu

allowed_hosts=*

Also edit /etc/default/apt-cacher:

AUTOSTART=1

And restart apt-cacher:

/etc/init.d/apt-cacher restart

Note that the installation server is now reachable at 10.1.2.100:3142/ubuntu/

PXE netinstaller

Download and unpack pxelinux into the TFTP root:

cd /var/lib/tftpboot/

wget http://archive.ubuntu.com/ubuntu/dists/lucid/main/installer-amd64/current/images/netboot/netboot.tar.gz

tar -xvzf netboot.tar.gz

Configure the IOS DHCP server to hand out static IP addresses and point to the PXE server and the boot file:

ip dhcp pool Pool-VLAN2

network 10.1.2.0 255.255.255.0

bootfile pxelinux.0

next-server 10.1.2.100

default-router 10.1.2.1

dns-server 89.150.129.4 89.150.129.10

lease 0 0 30

!

ip dhcp pool C04-Pool

host 10.1.2.101 255.255.255.0

hardware-address 0021.86f3.caa5

client-name C04

!

ip dhcp pool C05-Pool

host 10.1.2.102 255.255.255.0

hardware-address 0021.86f4.00e9

client-name C05

!

ip dhcp pool C06-Pool

host 10.1.2.103 255.255.255.0

hardware-address 0021.86f4.030d

client-name C06

!

ip dhcp pool C17-Pool

host 10.1.2.104 255.255.255.0

hardware-address 0021.86f4.1d79

client-name C17

If DHCP should run on Linux instead, install dhcp3 and create a scope in /etc/dhcp3/dhcpd.conf:

subnet 10.0.0.0 netmask 255.255.255.0 {

range 10.0.0.50 10.0.0.100;

option domain-name-servers 172.16.4.66;

option domain-name "cluster.tekkom.dk";

option routers 10.0.0.1;

default-lease-time 360;

max-lease-time 360;

next-server 10.0.0.1;

filename "pxelinux.0";

}

host node1 {

hardware ethernet 0:0:c0:5d:bd:95;

server-name "node1.cluster.tekkom.dk";

fixed-address 10.0.0.30;

}

Press F12 at boot and it just works.
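The four per-node IOS host pools above all follow one pattern, so they can be generated rather than typed. The following is a minimal sketch, not part of the original setup: the function name ios_host_pool is hypothetical, and the node names, addresses and MAC addresses are copied from the pools listed earlier. It only prints text that could be pasted into the switch or router configuration.

```shell
#!/bin/bash
# ios_host_pool NAME IP MAC: print one IOS DHCP host pool stanza.
# (Hypothetical helper; the stanza layout mirrors the hand-written pools.)
ios_host_pool() {
    local name=$1 ip=$2 mac=$3
    printf 'ip dhcp pool %s-Pool\n' "$name"
    printf ' host %s 255.255.255.0\n' "$ip"
    printf ' hardware-address %s\n' "$mac"
    printf ' client-name %s\n' "$name"
    printf '!\n'
}

# One line per node: name, fixed IP, MAC in Cisco dotted notation.
while read -r name ip mac; do
    ios_host_pool "$name" "$ip" "$mac"
done <<'EOF'
C04 10.1.2.101 0021.86f3.caa5
C05 10.1.2.102 0021.86f4.00e9
C06 10.1.2.103 0021.86f4.030d
C17 10.1.2.104 0021.86f4.1d79
EOF
```

Adding a node then means adding one line to the list instead of copying a stanza by hand.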

MRTG setup

Follow the steps described in Netband_Project_-_Ubuntu_server

Quick Guide

cfgmaker --no-down --output /etc/mrtg-10.1.2.50.cfg public@10.1.2.50

cfgmaker --no-down --output /etc/mrtg-10.1.2.1.cfg public@10.1.2.1

Make a few changes in /etc/mrtg.cfg:

Options[_]: bits, unknaszero

Include: /etc/mrtg-10.1.2.50.cfg

Include: /etc/mrtg-10.1.2.1.cfg

Remember to create the WorkDir:

mkdir /var/www/mrtg

Add MRTG to cron together with indexmaker, so the index page updates itself when you add devices to /etc/mrtg.cfg

crontab -e

# m h dom mon dow command

*/2 * * * * env LANG=C /usr/bin/mrtg /etc/mrtg.cfg --logging /var/log/mrtg/mrtg.log

*/5 * * * * /usr/bin/indexmaker /etc/mrtg.cfg > /var/www/mrtg/index.html

All interfaces can then be viewed at http://10.1.2.100/mrtg

If it does not work, check the log in /var/log/mrtg/mrtg.log

Nagios3

Edit /etc/nagios3/conf.d/hostgroups_nagios2.cfg so that, under hostgroup_name ping-servers, members is changed to *

Also edit /etc/nagios3/conf.d/host-gateway_nagios3.cfg:

define host {

host_name Switch

alias Switch

address 10.1.2.50

use generic-host

}

define host {

host_name C04

alias C04

address 10.1.2.101

use generic-host

}

define host {

host_name C05

alias C05

address 10.1.2.102

use generic-host

}

define host {

host_name C06

alias C06

address 10.1.2.103

use generic-host

}

define host {

host_name C17

alias C17

address 10.1.2.104

use generic-host

}
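The host definitions above differ only in name and address, so they can be produced from a short list. This is a rough sketch under that assumption: nagios_host is a hypothetical helper name, the names and addresses are the ones used above, and the output is meant to be redirected into the host configuration file by hand.

```shell
#!/bin/bash
# nagios_host NAME ADDRESS: print one Nagios host definition in the
# same shape as the hand-written ones (alias is set equal to host_name).
nagios_host() {
    local name=$1 address=$2
    printf 'define host {\n'
    printf '    host_name %s\n' "$name"
    printf '    alias %s\n' "$name"
    printf '    address %s\n' "$address"
    printf '    use generic-host\n'
    printf '}\n'
}

# One line per monitored device: name, then IP address.
while read -r name address; do
    nagios_host "$name" "$address"
done <<'EOF'
Switch 10.1.2.50
C04 10.1.2.101
C05 10.1.2.102
C06 10.1.2.103
C17 10.1.2.104
EOF
```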

We also change these lines in the config file so that it does not take several minutes to detect a failure. The documentation says this uses more CPU and network, but I don't care...

/etc/nagios3/nagios.cfg

service_freshness_check_interval=15

interval_length=15

host_inter_check_delay_method=d

service_interleave_factor=1

service_inter_check_delay_method=d

status_update_interval=10

Restart Nagios

/etc/init.d/nagios3 restart

Nagios can now be reached at http://10.1.2.100/nagios3. The default username is nagiosadmin and the password is the one you set during installation.

Links

http://www.ubuntugeek.com/nagios-configuration-tools-web-frontends-or-gui.html

Auto SSH login

On C01, generate a key pair that can be used to log in to all the machines.

ssh-keygen -t dsa

Copy our public key into authorized_keys, so we can SSH to ourselves

cat .ssh/id_dsa.pub >> .ssh/authorized_keys

Then create a .ssh folder in /root, or in the home directory of whichever user you prefer, on each node.

ssh 10.1.2.101 mkdir .ssh

ssh 10.1.2.102 mkdir .ssh

ssh 10.1.2.103 mkdir .ssh

ssh 10.1.2.104 mkdir .ssh

Copy the keys over to them:

scp .ssh/* 10.1.2.101:.ssh/

scp .ssh/* 10.1.2.102:.ssh/

scp .ssh/* 10.1.2.103:.ssh/

scp .ssh/* 10.1.2.104:.ssh/

You can now SSH to all machines without a password

ssh 10.1.2.101

Auto SSH User Login

If you want to avoid typing a username and password, generate a private/public key pair with puttygen and put the public key it produces into .ssh/authorized_keys

NFS server

Create a folder to export over NFS

mkdir /var/mirror

Add it to /etc/exports

/var/mirror *(rw,sync,no_subtree_check)

And restart the NFS server

/etc/init.d/nfs-kernel-server restart

Node installation

Manual installation

The machines are booted by hand via PXE and installed manually.

Packages can then be installed on the nodes with

ssh <ip> aptitude -y install nmap screen bmon iperf bonnie++ lmbench lm-sensors snmpd snmp build-essential gcc openssh-client nfs-common mpich2

Then add /var/mirror to /etc/fstab

c01:/var/mirror/ /var/mirror nfs rw 0 0
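When this step is scripted and re-run, the same fstab line can end up in the file several times. A small sketch of an idempotent append follows; add_line_once is a hypothetical helper, and the demonstration writes to a scratch file rather than the real /etc/fstab.

```shell
#!/bin/bash
# add_line_once FILE LINE: append LINE to FILE unless an identical
# whole line is already present (grep -x = whole line, -F = literal).
add_line_once() {
    local file=$1 line=$2
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Demonstration on a temporary file; on a node the target would be /etc/fstab.
demo=$(mktemp)
add_line_once "$demo" 'c01:/var/mirror/ /var/mirror nfs rw 0 0'
add_line_once "$demo" 'c01:/var/mirror/ /var/mirror nfs rw 0 0'
cat "$demo"    # the line appears only once
rm -f "$demo"
```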

Automount /home

If you want user permissions to carry over NFS when mounting, a few files need to be changed.

On ubuntu, I edited /etc/idmapd.conf and changed Domain to be the same value as what I set for NFSMAPID_DOMAIN, edit /etc/default/nfs-common and set NEED_STATD=no and NEED_IDMAPD=yes, then service rpc_pipefs restart and service idmapd restart, remount your nfs shares (in my case, service autofs restart); and all the uid/gid mapping should now be correct.

NewClusterH:/home/ /home nfs rw 0 0

Automatic installation

The machines can also be installed from a kickstart seed file.

To boot the machines with the seed file, place it on a web server and reference it from the boot screen installation menu.

nano /var/lib/tftpboot/ubuntu-installer/amd64/boot-screens/text.cfg

label kickstart

menu label ^Kickstart

kernel ubuntu-installer/amd64/linux

append ks=http://10.1.2.100/ks.cfg vga=normal initrd=ubuntu-installer/amd64/initrd.gz -- quiet

The kickstart file looks like this:

#Generated by Kickstart Configurator

#platform=AMD64 or Intel EM64T

#Selects the installation language

#System language

lang en_US

#Language modules to install

langsupport en_US

#Defines which keyboard we use

#System keyboard

keyboard dk

#System mouse

mouse

#The time zone we are in

#System timezone

timezone Europe/Copenhagen

#Root password = cisco

#Root password

rootpw --iscrypted $1$T5Fz4QeH$sY307XHHaNCfxL4ODXNyG.

#We have specified that no regular user is created.

#Initial user

user --disabled

#Reboot after installation

reboot

#Use text mode install

text

#Install OS instead of upgrade

install

#Which source to install from; here it is our apt-cacher server

#Use Web installation

url --url http://10.1.2.100:3142/ubuntu/

#Installs a boot loader.

#System bootloader configuration

bootloader --location=mbr

#Clear the Master Boot Record

zerombr yes

#Rearranges the disk!!!

#Partition clearing information

clearpart --all --initlabel

#Creates a swap partition of the recommended size and uses the rest for an ext4 / partition

#Disk partition information

part swap --recommended

part / --fstype ext4 --size 1 --grow

#Of course we want shadow passwords and md5

#System authorization information

auth --useshadow --enablemd5

#Firewall configuration

firewall --disabled

#I do not want a desktop on a server.

#Do not configure the X Window System

skipx

#Here we define the packages to install; for a desktop, ubuntu-desktop would be a candidate

%packages

openssh-server

nmap

screen

bmon

iperf

bonnie++

lmbench

lm-sensors

snmpd

snmp

build-essential

gcc

openssh-client

nfs-common

mpich2

Cluster installation

Hosts files

Add all nodes to /etc/hosts:

127.0.0.1 localhost

10.1.2.100 C01

10.1.2.101 C04

10.1.2.102 C05

10.1.2.103 C06

10.1.2.104 C17

Create a script called CopyToClients.sh

#!/bin/bash

scp $1 10.1.2.101:$2

scp $1 10.1.2.102:$2

scp $1 10.1.2.103:$2

scp $1 10.1.2.104:$2

Distribute the file to all the other nodes with:

./CopyToClients.sh /etc/hosts /etc/hosts
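The same copy can be written as a loop over a node list, which avoids editing four lines when a node is added. This is only a sketch: copy_to_nodes, NODES and SCP are names introduced here for illustration, and SCP defaults to "echo scp" so that running the sketch prints the commands instead of copying anything.

```shell
#!/bin/bash
# Node addresses as used elsewhere in this guide.
NODES="10.1.2.101 10.1.2.102 10.1.2.103 10.1.2.104"

# Dry run by default: prints the scp commands. Set SCP=scp to really copy.
SCP=${SCP:-echo scp}

# copy_to_nodes SRC DEST: copy local SRC to DEST on every node in NODES.
copy_to_nodes() {
    local src=$1 dest=$2 node
    for node in $NODES; do
        $SCP "$src" "$node:$dest"
    done
}

copy_to_nodes /etc/hosts /etc/hosts
```

Running it as `SCP=scp ./script.sh` would perform the real copy, assuming the passwordless SSH login set up earlier is in place.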

Install MPICH2

Install MPICH2 on all machines

aptitude install mpich2

Create a file called mpd.hosts in the home directory containing:

C01

C04

C05

C06

C17

And set a password in the file /etc/mpd.conf

echo MPD_SECRETWORD=cisco >> /etc/mpd.conf

chmod 600 /etc/mpd.conf

If you run as a user other than root, the file must instead be placed in that user's home directory (as ~/.mpd.conf)!

Copy it to the other machines

./CopyToClients.sh /etc/mpd.conf /etc/mpd.conf

Start MPD on all nodes

mpdboot -n 4

And test that it works; it should return the hostname of every node

mpdtrace

Test file in /var/mirror/test.sh

#!/bin/bash

cat /etc/hostname

Run it

mpiexec -n 40 /var/mirror/test.sh

Have Fun

mpiexec -n 1000 /var/mirror/test.sh | sort | uniq -c
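The pipeline above counts how many of the 1000 processes ran on each node: sort groups identical hostnames together and uniq -c prefixes each distinct hostname with its count. A small sketch with simulated output shows the effect without a running cluster; the hostnames fed in are stand-ins for what test.sh would print.

```shell
#!/bin/bash
# Stand-in for the output of six MPI processes running test.sh,
# each printing the hostname of the node it ran on.
simulated_output() {
    printf '%s\n' C01 C04 C05 C01 C04 C01
}

# Same post-processing as in the mpiexec command: one line per node,
# with the number of processes that ran there in front.
simulated_output | sort | uniq -c
```

With the simulated input, C01 is counted three times, C04 twice and C05 once. An uneven distribution in the real run would show up the same way.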

Shut MPD down again

mpdallexit

Install OpenMPI

Must of course be installed on all machines

aptitude install libopenmpi-dev openmpi-bin openmpi-doc

Run a program

mpicc /var/mirror/MPI_Hello.c -o /var/mirror/MPI_Hello

mpiexec -n 6 -hostfile mpd.hosts /var/mirror/MPI_Hello

Hello World from process 3, of 6 on Cl1N0

Hello World from process 0, of 6 on Cl1N0

Hello World from process 2, of 6 on Cl1N2

Hello World from process 5, of 6 on Cl1N2

Hello World from process 1, of 6 on Cl1N1

Hello World from process 4, of 6 on Cl1N1

http://auriza.site40.net/notes/mpi/openmpi-on-ubuntu-904/

Install Torque

Torque is the scheduler that most HPC sites use. You submit your jobs to it and it distributes them to the required machines; when one job finishes, it dispatches the next.

Links

- Installation of Torque/Maui for a Beowulf Cluster

- Community Surface Dynamics Modeling System Wiki

- TORQUE RESOURCE MANAGER

Other schedulers

- Loadleveler

- LSF

- Sun Grid Engine

Notes

Add the Torque server to /etc/hosts

127.0.0.1 torqueserver

Install packages

aptitude install torque-client torque-common torque-mom torque-scheduler torque-server

Stop torque

qterm

Programming with MPI

http://www.lam-mpi.org/tutorials/one-step/ezstart.php

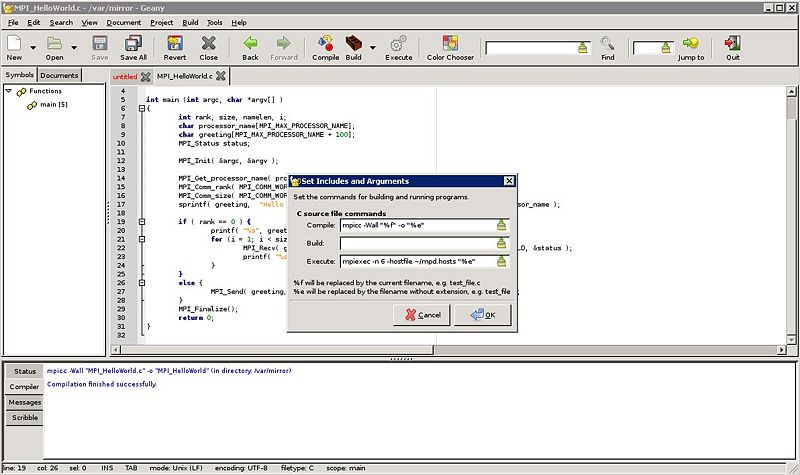

Geany IDE

There is an IDE called Geany that can be adapted for cluster compilation and execution. Installation:

aptitude install geany

To compile with mpicc and execute with mpiexec, adjust the commands under "Build > Set Includes and Arguments".

mpicc -Wall "%f" -o "%e"

mpiexec -n 20 -hostfile ~/mpd.hosts "%e"

And for C++ use mpic++ -Wall "%f" -o "%e"

The program you write must of course live in /var/mirror so that the other nodes also have access to it.

And Geany can of course be displayed remotely over X with

env DISPLAY=172.16.4.105:1 geany

Hello World example

HelloWorld program

#include "mpi.h"

#include <stdio.h>

int main (int argc, char *argv[] )

{

int rank, size, namelen;

char processor_name[MPI_MAX_PROCESSOR_NAME];

MPI_Init( &argc, &argv );

MPI_Get_processor_name( processor_name, &namelen );

MPI_Comm_rank( MPI_COMM_WORLD, &rank );

MPI_Comm_size( MPI_COMM_WORLD, &size );

printf( "Hello World from process %d, of %d on %s\n", rank, size, processor_name );

MPI_Finalize();

return 0;

}

Compile it with

mpicc -o helloWorld helloWorld.c

and run it with

mpiexec -n 10 /var/mirror/helloWorld

The result should be

Hello World from process 2, of 10 on C05

Hello World from process 6, of 10 on C05

Hello World from process 3, of 10 on C06

Hello World from process 1, of 10 on C04

Hello World from process 5, of 10 on C04

Hello World from process 7, of 10 on C06

Hello World from process 9, of 10 on C04

Hello World from process 0, of 10 on C01

Hello World from process 4, of 10 on C01

Hello World from process 8, of 10 on C01

Hello World with MPI

The previous example did not really use MPI for anything beyond getting rank and size.

If we change it so that rank 0 is the one printing to the screen and all the other ranks send their greeting to it via MPI, it looks like this:

#include "mpi.h"

#include <stdio.h>

#include <string.h>

int main (int argc, char *argv[] )

{

int rank, size, namelen, i;

char processor_name[MPI_MAX_PROCESSOR_NAME];

char greeting[MPI_MAX_PROCESSOR_NAME + 100];

MPI_Status status;

MPI_Init( &argc, &argv );

MPI_Get_processor_name( processor_name, &namelen );

MPI_Comm_rank( MPI_COMM_WORLD, &rank );

MPI_Comm_size( MPI_COMM_WORLD, &size );

sprintf( greeting, "Hello World from process %d, of %d on %s\n", rank, size, processor_name );

if ( rank == 0 ) {

printf( "%s", greeting );

for (i = 1; i < size; i++ ) {

MPI_Recv( greeting, sizeof( greeting ), MPI_CHAR, i, 1, MPI_COMM_WORLD, &status );

printf( "%s", greeting );

}

}

else {

MPI_Send( greeting, strlen( greeting ) + 1, MPI_CHAR, 0, 1, MPI_COMM_WORLD );

}

MPI_Finalize();

return 0;

}

Which returns:

0: Hello World from process 0, of 10 on C01

0: Hello World from process 1, of 10 on C04

0: Hello World from process 2, of 10 on C05

0: Hello World from process 3, of 10 on C06

0: Hello World from process 4, of 10 on C01

0: Hello World from process 5, of 10 on C04

0: Hello World from process 6, of 10 on C05

0: Hello World from process 7, of 10 on C06

0: Hello World from process 8, of 10 on C01

0: Hello World from process 9, of 10 on C04

MPI PingPong program

The PingPong program is made to benchmark MPI on different types of networks.

Code

/* pong.c Generic Benchmark code

* Dave Turner - Ames Lab - July of 1994+++

*

* Most Unix timers can't be trusted for very short times, so take this

* into account when looking at the results. This code also only times

* a single message passing event for each size, so the results may vary

* between runs. For more accurate measurements, grab NetPIPE from

* http://www.scl.ameslab.gov/ .

*/

#include "mpi.h"

#include <stdio.h>

#include <stdlib.h>

int main (int argc, char **argv)

{

int myproc, size, other_proc, nprocs, i, last;

double t0, t1, time;

double *a, *b;

double max_rate = 0.0, min_latency = 10e6;

MPI_Request request, request_a, request_b;

MPI_Status status;

#if defined (_CRAYT3E)

a = (double *) shmalloc (132000 * sizeof (double));

b = (double *) shmalloc (132000 * sizeof (double));

#else

a = (double *) malloc (132000 * sizeof (double));

b = (double *) malloc (132000 * sizeof (double));

#endif

for (i = 0; i < 132000; i++) {

a[i] = (double) i;

b[i] = 0.0;

}

MPI_Init(&argc, &argv);

MPI_Comm_size(MPI_COMM_WORLD, &nprocs);

MPI_Comm_rank(MPI_COMM_WORLD, &myproc);

if (nprocs != 2) exit (1);

other_proc = (myproc + 1) % 2;

printf("Hello from %d of %d\n", myproc, nprocs);

MPI_Barrier(MPI_COMM_WORLD);

/* Timer accuracy test */

t0 = MPI_Wtime();

t1 = MPI_Wtime();

while (t1 == t0) t1 = MPI_Wtime();

if (myproc == 0)

printf("Timer accuracy of ~%f usecs\n\n", (t1 - t0) * 1000000);

/* Communications between nodes

* - Blocking sends and recvs

* - No guarantee of prepost, so might pass through comm buffer

*/

for (size = 8; size <= 1048576; size *= 2) {

for (i = 0; i < size / 8; i++) {

a[i] = (double) i;

b[i] = 0.0;

}

last = size / 8 - 1;

MPI_Barrier(MPI_COMM_WORLD);

t0 = MPI_Wtime();

if (myproc == 0) {

MPI_Send(a, size/8, MPI_DOUBLE, other_proc, 0, MPI_COMM_WORLD);

MPI_Recv(b, size/8, MPI_DOUBLE, other_proc, 0, MPI_COMM_WORLD, &status);

} else {

MPI_Recv(b, size/8, MPI_DOUBLE, other_proc, 0, MPI_COMM_WORLD, &status);

b[0] += 1.0;

if (last != 0)

b[last] += 1.0;

MPI_Send(b, size/8, MPI_DOUBLE, other_proc, 0, MPI_COMM_WORLD);

}

t1 = MPI_Wtime();

time = 1.e6 * (t1 - t0);

MPI_Barrier(MPI_COMM_WORLD);

if ((b[0] != 1.0 || b[last] != last + 1)) {

printf("ERROR - b[0] = %f b[%d] = %f\n", b[0], last, b[last]);

exit (1);

}

for (i = 1; i < last - 1; i++)

if (b[i] != (double) i)

printf("ERROR - b[%d] = %f\n", i, b[i]);

if (myproc == 0 && time > 0.000001) {

printf(" %7d bytes took %9.0f usec (%8.3f MB/sec)\n",

size, time, 2.0 * size / time);

if (2 * size / time > max_rate) max_rate = 2 * size / time;

if (time / 2 < min_latency) min_latency = time / 2;

} else if (myproc == 0) {

printf(" %7d bytes took less than the timer accuracy\n", size);

}

}

/* Async communications

* - Prepost receives to guarantee bypassing the comm buffer

*/

MPI_Barrier(MPI_COMM_WORLD);

if (myproc == 0) printf("\n Asynchronous ping-pong\n\n");

for (size = 8; size <= 1048576; size *= 2) {

for (i = 0; i < size / 8; i++) {

a[i] = (double) i;

b[i] = 0.0;

}

last = size / 8 - 1;

MPI_Irecv(b, size/8, MPI_DOUBLE, other_proc, 0, MPI_COMM_WORLD, &request);

MPI_Barrier(MPI_COMM_WORLD);

t0 = MPI_Wtime();

if (myproc == 0) {

MPI_Send(a, size/8, MPI_DOUBLE, other_proc, 0, MPI_COMM_WORLD);

MPI_Wait(&request, &status);

} else {

MPI_Wait(&request, &status);

b[0] += 1.0;

if (last != 0)

b[last] += 1.0;

MPI_Send(b, size/8, MPI_DOUBLE, other_proc, 0, MPI_COMM_WORLD);

}

t1 = MPI_Wtime();

time = 1.e6 * (t1 - t0);

MPI_Barrier(MPI_COMM_WORLD);

if ((b[0] != 1.0 || b[last] != last + 1))

printf("ERROR - b[0] = %f b[%d] = %f\n", b[0], last, b[last]);

for (i = 1; i < last - 1; i++)

if (b[i] != (double) i)

printf("ERROR - b[%d] = %f\n", i, b[i]);

if (myproc == 0 && time > 0.000001) {

printf(" %7d bytes took %9.0f usec (%8.3f MB/sec)\n",

size, time, 2.0 * size / time);

if (2 * size / time > max_rate) max_rate = 2 * size / time;

if (time / 2 < min_latency) min_latency = time / 2;

} else if (myproc == 0) {

printf(" %7d bytes took less than the timer accuracy\n", size);

}

}

/* Bidirectional communications

* - Prepost receives to guarantee bypassing the comm buffer

*/

MPI_Barrier(MPI_COMM_WORLD);

if (myproc == 0) printf("\n Bi-directional asynchronous ping-pong\n\n");

for (size = 8; size <= 1048576; size *= 2) {

for (i = 0; i < size / 8; i++) {

a[i] = (double) i;

b[i] = 0.0;

}

last = size / 8 - 1;

MPI_Irecv(b, size/8, MPI_DOUBLE, other_proc, 0, MPI_COMM_WORLD, &request_b);

MPI_Irecv(a, size/8, MPI_DOUBLE, other_proc, 0, MPI_COMM_WORLD, &request_a);

MPI_Barrier(MPI_COMM_WORLD);

t0 = MPI_Wtime();

MPI_Send(a, size/8, MPI_DOUBLE, other_proc, 0, MPI_COMM_WORLD);

MPI_Wait(&request_b, &status);

b[0] += 1.0;

if (last != 0)

b[last] += 1.0;

MPI_Send(b, size/8, MPI_DOUBLE, other_proc, 0, MPI_COMM_WORLD);

MPI_Wait(&request_a, &status);

t1 = MPI_Wtime();

time = 1.e6 * (t1 - t0);

MPI_Barrier(MPI_COMM_WORLD);

if ((a[0] != 1.0 || a[last] != last + 1))

printf("ERROR - a[0] = %f a[%d] = %f\n", a[0], last, a[last]);

for (i = 1; i < last - 1; i++)

if (a[i] != (double) i)

printf("ERROR - a[%d] = %f\n", i, a[i]);

if (myproc == 0 && time > 0.000001) {

printf(" %7d bytes took %9.0f usec (%8.3f MB/sec)\n",

size, time, 2.0 * size / time);

if (2 * size / time > max_rate) max_rate = 2 * size / time;

if (time / 2 < min_latency) min_latency = time / 2;

} else if (myproc == 0) {

printf(" %7d bytes took less than the timer accuracy\n", size);

}

}

if (myproc == 0)

printf("\n Max rate = %f MB/sec Min latency = %f usec\n",

max_rate, min_latency);

MPI_Finalize();

return 0;

}

100 Mbit/s network

Hello from 0 of 2

Hello from 1 of 2

Timer accuracy of ~0.953674 usecs

8 bytes took 353 usec ( 0.045 MB/sec)

16 bytes took 268 usec ( 0.119 MB/sec)

32 bytes took 245 usec ( 0.261 MB/sec)

64 bytes took 274 usec ( 0.467 MB/sec)

128 bytes took 281 usec ( 0.911 MB/sec)

256 bytes took 245 usec ( 2.091 MB/sec)

512 bytes took 300 usec ( 3.414 MB/sec)

1024 bytes took 403 usec ( 5.083 MB/sec)

2048 bytes took 628 usec ( 6.522 MB/sec)

4096 bytes took 873 usec ( 9.383 MB/sec)

8192 bytes took 1597 usec ( 10.258 MB/sec)

16384 bytes took 3188 usec ( 10.278 MB/sec)

32768 bytes took 5866 usec ( 11.172 MB/sec)

65536 bytes took 11476 usec ( 11.421 MB/sec)

131072 bytes took 23561 usec ( 11.126 MB/sec)

262144 bytes took 45460 usec ( 11.533 MB/sec)

524288 bytes took 93597 usec ( 11.203 MB/sec)

1048576 bytes took 179155 usec ( 11.706 MB/sec)

Asynchronous ping-pong

8 bytes took 256 usec ( 0.063 MB/sec)

16 bytes took 277 usec ( 0.116 MB/sec)

32 bytes took 259 usec ( 0.247 MB/sec)

64 bytes took 271 usec ( 0.472 MB/sec)

128 bytes took 290 usec ( 0.882 MB/sec)

256 bytes took 284 usec ( 1.802 MB/sec)

512 bytes took 300 usec ( 3.414 MB/sec)

1024 bytes took 345 usec ( 5.936 MB/sec)

2048 bytes took 679 usec ( 6.032 MB/sec)

4096 bytes took 856 usec ( 9.571 MB/sec)

8192 bytes took 1609 usec ( 10.182 MB/sec)

16384 bytes took 3177 usec ( 10.314 MB/sec)

32768 bytes took 5837 usec ( 11.228 MB/sec)

65536 bytes took 11531 usec ( 11.367 MB/sec)

131072 bytes took 23597 usec ( 11.109 MB/sec)

262144 bytes took 45400 usec ( 11.548 MB/sec)

524288 bytes took 89853 usec ( 11.670 MB/sec)

1048576 bytes took 179022 usec ( 11.715 MB/sec)

Bi-directional asynchronous ping-pong

8 bytes took 246 usec ( 0.065 MB/sec)

16 bytes took 249 usec ( 0.129 MB/sec)

32 bytes took 247 usec ( 0.259 MB/sec)

64 bytes took 250 usec ( 0.512 MB/sec)

128 bytes took 251 usec ( 1.021 MB/sec)

256 bytes took 249 usec ( 2.057 MB/sec)

512 bytes took 300 usec ( 3.411 MB/sec)

1024 bytes took 345 usec ( 5.936 MB/sec)

2048 bytes took 660 usec ( 6.207 MB/sec)

4096 bytes took 854 usec ( 9.592 MB/sec)

8192 bytes took 1692 usec ( 9.683 MB/sec)

16384 bytes took 3148 usec ( 10.409 MB/sec)

32768 bytes took 5913 usec ( 11.083 MB/sec)

65536 bytes took 13798 usec ( 9.499 MB/sec)

131072 bytes took 27642 usec ( 9.484 MB/sec)

262144 bytes took 72140 usec ( 7.268 MB/sec)

524288 bytes took 146771 usec ( 7.144 MB/sec)

1048576 bytes took 298708 usec ( 7.021 MB/sec)

Max rate = 11.714504 MB/sec Min latency = 122.427940 usec

1 Gbit/s network

Hello from 0 of 2

Hello from 1 of 2

Timer accuracy of ~1.192093 usecs

8 bytes took 169 usec ( 0.095 MB/sec)

16 bytes took 158 usec ( 0.202 MB/sec)

32 bytes took 162 usec ( 0.395 MB/sec)

64 bytes took 151 usec ( 0.848 MB/sec)

128 bytes took 158 usec ( 1.620 MB/sec)

256 bytes took 158 usec ( 3.239 MB/sec)

512 bytes took 165 usec ( 6.207 MB/sec)

1024 bytes took 193 usec ( 10.605 MB/sec)

2048 bytes took 226 usec ( 18.122 MB/sec)

4096 bytes took 233 usec ( 35.133 MB/sec)

8192 bytes took 321 usec ( 51.055 MB/sec)

16384 bytes took 565 usec ( 57.991 MB/sec)

32768 bytes took 1410 usec ( 46.479 MB/sec)

65536 bytes took 2577 usec ( 50.861 MB/sec)

131072 bytes took 3138 usec ( 83.537 MB/sec)

262144 bytes took 5585 usec ( 93.875 MB/sec)

524288 bytes took 10363 usec ( 101.186 MB/sec)

1048576 bytes took 19895 usec ( 105.411 MB/sec)

Asynchronous ping-pong

8 bytes took 254 usec ( 0.063 MB/sec)

16 bytes took 131 usec ( 0.244 MB/sec)

32 bytes took 129 usec ( 0.496 MB/sec)

64 bytes took 131 usec ( 0.976 MB/sec)

128 bytes took 129 usec ( 1.985 MB/sec)

256 bytes took 136 usec ( 3.768 MB/sec)

512 bytes took 141 usec ( 7.267 MB/sec)

1024 bytes took 157 usec ( 13.035 MB/sec)

2048 bytes took 151 usec ( 27.140 MB/sec)

4096 bytes took 206 usec ( 39.768 MB/sec)

8192 bytes took 293 usec ( 55.915 MB/sec)

16384 bytes took 438 usec ( 74.817 MB/sec)

32768 bytes took 921 usec ( 71.157 MB/sec)

65536 bytes took 1538 usec ( 85.220 MB/sec)

131072 bytes took 3050 usec ( 85.946 MB/sec)

262144 bytes took 5217 usec ( 100.495 MB/sec)

524288 bytes took 9749 usec ( 107.558 MB/sec)

1048576 bytes took 18777 usec ( 111.688 MB/sec)

Bi-directional asynchronous ping-pong

8 bytes took 191 usec ( 0.084 MB/sec)

16 bytes took 137 usec ( 0.233 MB/sec)

32 bytes took 123 usec ( 0.520 MB/sec)

64 bytes took 123 usec ( 1.040 MB/sec)

128 bytes took 129 usec ( 1.985 MB/sec)

256 bytes took 123 usec ( 4.162 MB/sec)

512 bytes took 122 usec ( 8.389 MB/sec)

1024 bytes took 125 usec ( 16.393 MB/sec)

2048 bytes took 172 usec ( 23.828 MB/sec)

4096 bytes took 205 usec ( 39.953 MB/sec)

8192 bytes took 306 usec ( 53.520 MB/sec)

16384 bytes took 488 usec ( 67.142 MB/sec)

32768 bytes took 988 usec ( 66.332 MB/sec)

65536 bytes took 1694 usec ( 77.376 MB/sec)

131072 bytes took 4879 usec ( 53.729 MB/sec)

262144 bytes took 8126 usec ( 64.520 MB/sec)

524288 bytes took 15152 usec ( 69.204 MB/sec)

1048576 bytes took 29886 usec ( 70.172 MB/sec)

Max rate = 111.687910 MB/sec Min latency = 61.035156 usec

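Conclusion

A faster network gives faster transfers, and you should avoid sending many small messages over Ethernet: in both runs, messages up to about 512 bytes take roughly the same time regardless of size, so latency dominates.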
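The MB/sec column in the results is computed by pong.c as 2 * size / time, with size in bytes and time in microseconds for the full round trip. A short sketch reproduces a few table values from the printed byte counts and times; rate_mb_s is a hypothetical helper name, and awk is used only for the floating-point division.

```shell
#!/bin/bash
# rate_mb_s BYTES USEC: round-trip throughput as pong.c reports it.
# Bytes per microsecond equals (decimal) megabytes per second.
rate_mb_s() {
    awk -v size="$1" -v usec="$2" 'BEGIN { printf "%.3f\n", 2 * size / usec }'
}

rate_mb_s 1048576 179155   # 1 MiB message, 100 Mbit/s run (blocking)
rate_mb_s 1048576 19895    # 1 MiB message, 1 Gbit/s run (blocking)
rate_mb_s 8 353            # 8 byte message, 100 Mbit/s run (blocking)
```

The first two calls give the 11.706 and 105.411 MB/sec listed for the largest blocking transfers, while the 8 byte message reaches only 0.045 MB/sec because almost all of its 353 usec is fixed latency.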

Matrix program with C++

To compile it you need g++ installed, and it must be compiled with mpiCC

// matrixstats.cpp

// Written by Drew Weitz

// Last Modified: June 9, 2003

// This program is intended to be a very basic introduction to MPI programming.

// For information about each specific function call, consult the man pages

// available on the cluster, or check out information available online at

// www.iitk.ac.in/cc/param/mpi_calls.html

// to include the MPI function library

#include "mpi.h"

#include <stdio.h>

#include <string>

int main (int argc, char *argv[]) {

// these variables will hold MPI specific information.

// numprocs will store the total number of processors allocated

// for the program run; myrank will store each individual processor's

// number assigned by the master.

int numprocs, myrank;

// In general, all nodes have a copy of all the variables defined.

// MPI_Init prepares the program run to communicate between all the

// nodes. It is necessary to have this function call in all MPI

// code.

MPI_Init(&argc, &argv);

// MPI_Comm_size initializes the numprocs variable to be the number

// of processors allocated to the program run. MPI_COMM_WORLD is

// a macro that MPI uses to address the correct MPI network. Since

// multiple MPI jobs could be running at once, we want to have a way

// of addressing only the processors in our program run (or our

// "world").

MPI_Comm_size(MPI_COMM_WORLD, &numprocs);

// MPI_Comm_rank initializes each node's myrank variable to be its

// processor number.

MPI_Comm_rank(MPI_COMM_WORLD, &myrank);

MPI_Status status;

char greeting[MPI_MAX_PROCESSOR_NAME + 80];

int thematrix[numprocs-1][numprocs-1];

int thecube[numprocs-1][numprocs-1][numprocs-1];

int resultmatrix[numprocs-1][numprocs-1];

int temp = 0;

if (myrank == 0) {

printf("Preparing the matrix...\n");

for (int i=0; i < numprocs-1; i++) {

for (int j=0; j < numprocs-1; j++) {

thematrix[i][j] = 0;

}

}

printf("The matrix is:\n");

for (int i=0; i < numprocs-1; i++) {

for (int j=0; j < numprocs-1; j++) {

printf("%i\t", thematrix[i][j]);

}

printf("\n");

}

printf("Sending the matrix to the other nodes...\n");

for (int k=1; k < numprocs; k++) {

// MPI_Send is the function call that sends the values of one nodes'

// variable out to another node. In effect, it is the way in which

// data can be passed to another node. A node can either receive this

// "message" (via MPI_RECV), or it can be ignored.

MPI_Send(thematrix, (numprocs-1)*(numprocs-1),

MPI_INT, k, 1,

MPI_COMM_WORLD);

}

printf("Waiting for responses...\n");

for (int k=1; k < numprocs; k++) {

// MPI_Recv is the complement to MPI_Send. This is one way

// in which the processor will receive data. In the case of

// MPI_Recv, the processor will stop executing its code and

// wait for the expected message. If you do not want the processor

// to stop executing code while waiting for a "message", you must

// use a "non-blocking" receive function. See the website listed

// at the top of the program for more information.

MPI_Recv(thematrix, (numprocs-1)*(numprocs-1),

MPI_INT, k, 1,

MPI_COMM_WORLD, &status);

for (int i=0; i < numprocs-1; i++) {

for (int j=0; j < numprocs-1; j++) {

thecube[k-1][i][j] = thematrix[i][j];

}

}

}

printf("The result is...\n");

for (int k=0; k < numprocs-1; k++) {

printf("Level %i:\n", k);

for (int i=0; i < numprocs-1; i++) {

for (int j=0; j < numprocs-1; j++) {

printf("%i\t", thecube[k][i][j]);

}

printf("\n");

}

}

printf("Collapsing results...\n");

for (int i=0; i < numprocs-1; i++) {

for (int j=0; j < numprocs-1; j++) {

for (int k=0; k < numprocs -1; k++) {

temp = temp + thecube [k][i][j];

}

resultmatrix[i][j] = temp;

temp = 0;

}

}

for (int i=0; i < numprocs-1; i++) {

for (int j=0; j < numprocs-1; j++) {

printf("%i\t", resultmatrix[i][j]);

}

printf("\n");

}

}

else {

MPI_Recv(thematrix, (numprocs-1)*(numprocs-1), MPI_INT, 0, 1,

MPI_COMM_WORLD, &status);

sprintf(greeting, "matrix received...");

for (int j=0; j < numprocs-1; j++) {

thematrix[myrank-1][j] = myrank*j;

}

MPI_Send(thematrix, (numprocs-1)*(numprocs-1), MPI_INT, 0, 1,

MPI_COMM_WORLD);

}

// MPI_FINALIZE closes all the connections opened during the MPI

// program run. It is necessary for MPI Programs.

MPI_Finalize();

return 0;

}

Cluster Management

Small scripts for performing actions on the clients

Shutting down nodes

ShutdownClients.sh

#!/bin/bash

ssh 10.1.2.101 shutdown -h now &

ssh 10.1.2.102 shutdown -h now &

ssh 10.1.2.103 shutdown -h now &

ssh 10.1.2.104 shutdown -h now &

Wake-on-LAN for nodes

WakeClients.sh

wakeonlan 00:21:86:f3:ca:a5

wakeonlan 00:21:86:f4:00:e9

wakeonlan 00:21:86:f4:03:0d

wakeonlan 00:21:86:f4:1d:79
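The MAC addresses passed to wakeonlan are the same ones used in the IOS DHCP pools, only written with colons instead of Cisco's dotted notation. A minimal sketch of the conversion, with cisco_to_colon_mac as a hypothetical helper name:

```shell
#!/bin/bash
# cisco_to_colon_mac MAC: convert Cisco dotted notation (0021.86f3.caa5)
# to colon-separated notation (00:21:86:f3:ca:a5).
cisco_to_colon_mac() {
    # Drop the dots, insert a colon after every two hex digits,
    # then remove the trailing colon.
    printf '%s\n' "$1" | tr -d '.' | sed 's/../&:/g; s/:$//'
}

cisco_to_colon_mac 0021.86f3.caa5
```

The result could be passed straight on, for example `wakeonlan "$(cisco_to_colon_mac 0021.86f3.caa5)"`.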

Mount the NFS mirror on nodes

MountMirrorOnClients.sh

#!/bin/bash

ssh 10.1.2.101 mount c01:/var/mirror /var/mirror

ssh 10.1.2.102 mount c01:/var/mirror /var/mirror

ssh 10.1.2.103 mount c01:/var/mirror /var/mirror

ssh 10.1.2.104 mount c01:/var/mirror /var/mirror

Update all machines

updateAllMachines.sh

#!/bin/bash

dsh -M -q -c -f mpd.hosts aptitude update

dsh -M -q -c -f mpd.hosts aptitude -y upgrade