CentOS cluster computing

Latest revision as of 13:29, 8 November 2009

Contents

- 1 Which services are available with Red Hat/CentOS Clustering

- 2 Abbreviations and systems

- 3 Red Hat Cluster Suite Introduction

- 4 Cluster management with Conga

- 5 Cluster management from the console

- 6 cluster.conf configuration file

- 7 notes

- 8 Configuration of services

- 9 Manuals

Which services are available with Red Hat/CentOS Clustering

High availability

- rgmanager (Relocates services from one node to another node in case of malfunction)

Abbreviations and systems

| System | Meaning | Runs on |
|---|---|---|
| CCS | Cluster Configuration System | Each node |
| CLVM | Cluster Logical Volume Manager. Provides volume management to nodes. | Each node |
| CMAN | Cluster Manager | Each node |
| DLM | Distributed Lock Manager | Each node |
| fenced | Fence daemon. Fences failed nodes so that they cannot write to shared storage. | Each node |
| GFS | Global File System. Shared storage among nodes. | Each node |
| GNBD | Global Network Block Device. Low-level storage access over Ethernet. | GFS server |
| LVS | Linux Virtual Server. Routing software that provides IP load balancing. | Two or more Linux gateways |
| RHCS | Red Hat Cluster Suite. Software components for building various types of clusters. | |

Quorum

- Quorum: the minimum number of members of an organization that must be present to conduct business or, in this case, the minimum number of votes that must be available to keep the cluster running.

- In Red Hat/CentOS clustering, the cluster is quorate when more than 50% of the votes are available. By default each node has one vote. If your cluster consists of eight nodes, it becomes available only once five nodes have joined. Use the cman_tool status command to see the current operational status.

- This rule is necessary because, for example, a network error could cut an eight-node cluster in half. Without the rule there would suddenly be two four-node clusters, each acting on the same databases and storage and destroying their integrity. With the rule, neither half has the five votes it needs, so neither half runs services.
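The "more than 50% of the votes" rule can be sketched as a small shell calculation. This is only an illustration of the arithmetic; the function names `quorum_votes` and `is_quorate` are made up for this example and are not part of the cluster tools.

```shell
#!/bin/sh
# Votes needed for quorum: strictly more than half of the expected votes.
# (Function names are illustrative, not part of cman.)
quorum_votes() {
    expected_votes=$1
    echo $(( expected_votes / 2 + 1 ))
}

# Succeeds when a partition holding $1 votes out of $2 expected votes is quorate.
is_quorate() {
    votes_present=$1
    expected_votes=$2
    [ "$votes_present" -ge "$(quorum_votes "$expected_votes")" ]
}

quorum_votes 8    # eight nodes with one vote each: prints 5
quorum_votes 3    # three nodes with one vote each: prints 2
```

For the split described above, `is_quorate 4 8` fails for both halves, which is why neither half keeps running services.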

Red Hat Cluster Suite Introduction

Red Hat Cluster Suite (RHCS) is an integrated set of software components that can be deployed in a variety of configurations to suit your needs for performance, high-availability, load balancing, scalability, file sharing, and economy.

RHCS consists of the following major components:

- Cluster infrastructure - Provides fundamental functions for nodes to work together as a cluster: configuration-file management, membership management, lock management, and fencing.

- High-availability Service Management - Provides failover of services from one cluster node to another in case a node becomes inoperative.

- Cluster administration tools - Configuration and management tools for setting up, configuring, and managing a Red Hat cluster. The tools are for use with the Cluster Infrastructure components, the High-availability and Service Management components, and storage.

- Linux Virtual Server (LVS) - Routing software that provides IP load balancing. LVS runs on a pair of redundant servers that distribute client requests evenly to the real servers behind them.

Additional Cluster Components

You can supplement Red Hat Cluster Suite with the following components, which are part of an optional package (and not part of Red Hat Cluster Suite):

- Red Hat GFS (Global File System) — Provides a cluster file system for use with Red Hat Cluster Suite. GFS allows multiple nodes to share storage at a block level as if the storage were connected locally to each cluster node.

- Cluster Logical Volume Manager (CLVM) — Provides volume management of cluster storage.

- Global Network Block Device (GNBD) — An ancillary component of GFS that exports block-level storage to Ethernet. This is an economical way to make block-level storage available to Red Hat GFS.

Cluster management with Conga

Starting luci and ricci

Follow the instructions in chapter 3, "Configuring Red Hat Cluster With Conga", of the Red Hat 5.2 Cluster Administration manual.

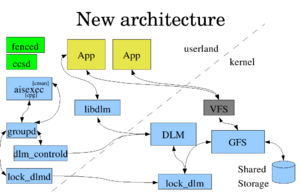

Cluster management from the console

Cluster subsystems

There are quite a few subsystems and daemons. See the picture below

cman - Cluster Manager

In CMAN version 2, cman is built on OpenAIS. See the aiscman tutorial.

- aisexec - The OpenAIS executive, the daemon that does the actual cluster management. The nodes communicate by passing a token.

- cman_tool - Cluster Management Tool (join, leave, status etc.)

- service cman start starts the cluster manager services; service cman status reports whether they are running.

ccs - Cluster Configuration System

- ccsd - daemon that manages the cluster.conf file in a cman cluster.

- ccs_tool

clvmd - Cluster LVM daemon

clvmd is the daemon that distributes LVM metadata updates around a cluster. It must be running on all nodes in the cluster and will give an error if a node in the cluster does not have this daemon running.

cluster.conf configuration file

See the CentOS Cluster Configuration article.

notes

Services

ricci

ccsd - Cluster Configuration System Daemon

luci

cman

rgmanager - Resource Group Manager

rgmanager manages and provides failover capabilities for collections of resources called services, resource groups, or resource trees in a cluster. See the Red Hat rgmanager wiki page.

Remember to enable and start this service:

    chkconfig --level 345 rgmanager on
    service rgmanager start

The service runs the clurgmgrd daemon (Cluster Resource Group Manager Daemon).
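Once rgmanager is running, a service can also be moved by hand with clusvcadm (listed under utilities). The sketch below wraps the relocate call in a hypothetical helper, `relocate_service`, that by default only prints the command so it is safe to try on a machine without a cluster. The target node is an assumption; note that with a restricted failover domain only the nodes in that domain are eligible.

```shell
#!/bin/sh
# Print (dry run) or execute the relocation of a cluster service.
# relocate_service and DRY_RUN are illustrative names, not part of rgmanager.
# clusvcadm -r relocates a service, -m names the preferred target member.
relocate_service() {
    service_name=$1
    target_node=$2
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "clusvcadm -r $service_name -m $target_node"
    else
        clusvcadm -r "$service_name" -m "$target_node"
    fi
}

# Dry run: shows the command that would move apache_service to node2.
relocate_service apache_service node2.tekkom.dk
```

Setting `DRY_RUN=0` runs the real command; clustat then shows the new owner.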

groupd

groupd - the group manager for fenced, dlm_controld and gfs_controld

- use the group_tool command to see status.

fenced

The fencing daemon, fenced, fences cluster nodes that have failed. Fencing a node generally means rebooting it or otherwise preventing it from writing to storage, for example by disabling its port on a SAN switch.

DLM - Distributed Lock Manager

- The daemon dlm_controld

Many distributed/cluster applications use a DLM for inter-process synchronization where processes may live on different machines. GFS and CLVM are two examples. See the Wikipedia DLM article.

gfs_controld

gfs_controld - daemon that manages mounting, unmounting, recovery and POSIX locks

Mounting, unmounting and node failure are the main cluster events that gfs_controld controls. It also manages the assignment of journals to different nodes. The mount.gfs and umount.gfs programs communicate with gfs_controld to join/leave the mount group and receive the necessary options for the kernel mount.

clvmd - cluster LVM daemon

clvmd is the daemon that distributes LVM metadata updates around a cluster. It must be running on all nodes in the cluster and will give an error if a node in the cluster does not have this daemon running.

aisexec - The OpenAIS executive

The daemon that does the actual cluster management. The nodes communicate by passing a token.

Config of CentOS Cluster node

    # Enable the services at boot
    chkconfig --level 2345 ricci on
    chkconfig --level 2345 luci on
    chkconfig --level 2345 rgmanager on
    chkconfig --level 2345 gfs on
    chkconfig --level 2345 clvmd on
    #chkconfig --level 2345 fenced on
    chkconfig --level 2345 cman on
    #chkconfig --level 2345 ccsd on

    # Conga: start the ricci agent and initialise the luci web interface
    service ricci start
    service luci stop
    luci_admin init
    service luci restart

    # Start the cluster software: cman first, rgmanager last
    service cman start
    service clvmd start
    service gfs start
    service rgmanager start
    # fenced and ccsd are started by the cman init script
    #service fenced start

utilities

- clustat - Cluster status

- cman_tool

- ccs_tool

- group_tool

- gfs_tool

- fence_tool

- dlm_tool

- lvs

- clusvcadm - Cluster User Service Administration Utility

- luci_admin

Files

- /etc/cluster/cluster.conf

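Hostnames: give every node a hostname that the other nodes can resolve, either in /etc/hosts or through DNS.

- node1.tekkom.dk

- node2.tekkom.dk

- ..

    hostname node1.tekkom.dk
    vi /etc/sysconfig/network    # set HOSTNAME=node1.tekkom.dk so the name survives a reboot
    service network restart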
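Before starting the cluster it can help to check that every node name really appears in the hosts file. The following is a minimal sketch of such a check; `missing_hosts` is a hypothetical helper name, and the check only looks at a hosts file, so it says nothing about names that are resolved through DNS.

```shell
#!/bin/sh
# Print every node name that has no entry in the given hosts file.
# Usage: missing_hosts HOSTS_FILE NODE...
# (missing_hosts is an illustrative name, not a standard tool.)
missing_hosts() {
    hosts_file=$1
    shift
    for node in "$@"; do
        # Strip comments, then look for the name as a whole field after the address.
        if ! sed 's/#.*//' "$hosts_file" |
             awk -v n="$node" '
                 { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                 END { exit !found }'
        then
            echo "$node"
        fi
    done
}
```

A typical call would be `missing_hosts /etc/hosts node1.tekkom.dk node2.tekkom.dk`; no output means all names were found.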

examples

- clustat - Cluster status

    [root@node1 ~]# clustat
    Cluster Status for webcluster2 @ Sun Apr 5 07:36:43 2009
    Member Status: Quorate

     Member Name                  ID   Status
     ------ ----                  ---- ------
     node1.tekkom.dk              1    Online, Local, rgmanager
     node2.tekkom.dk              2    Online, rgmanager
     node3.tekkom.dk              3    Online, rgmanager

     Service Name                 Owner (Last)         State
     ------- ----                 ----- ------         -----
     service:apache_service       node2.tekkom.dk      started
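For scripting, the owner and state of a service can be picked out of the clustat listing. The sketch below assumes the column layout shown in the example above (service name, owner, state as whitespace-separated fields); `service_owner` is a made-up helper name.

```shell
#!/bin/sh
# Read clustat output on stdin and print "owner state" for the named service.
# Assumes the three-column service listing shown in the example output.
service_owner() {
    awk -v svc="service:$1" '$1 == svc { print $2, $3 }'
}
```

On a cluster node this would be used as `clustat | service_owner apache_service`, which for the output above gives `node2.tekkom.dk started`.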

- cman_tool status

    [root@node1 cluster]# cman_tool status
    Version: 6.1.0
    Config Version: 16
    Cluster Name: webcluster2
    Cluster Id: 28826
    Cluster Member: Yes
    Cluster Generation: 252
    Membership state: Cluster-Member
    Nodes: 3
    Expected votes: 3
    Total votes: 3
    Quorum: 2
    Active subsystems: 9
    Flags: Dirty
    Ports Bound: 0 11 177
    Node name: node3.tekkom.dk
    Node ID: 3
    Multicast addresses: 239.192.112.11
    Node addresses: 192.168.138.157
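The "Total votes" and "Quorum" lines of this output are enough to tell whether the cluster is quorate. A minimal sketch of that check, assuming the "Name: value" layout shown above (`status_is_quorate` is a hypothetical helper name):

```shell
#!/bin/sh
# Read "cman_tool status" output on stdin and succeed only if the
# total votes reach the quorum value reported by cman.
status_is_quorate() {
    awk -F': *' '
        $1 == "Total votes" { total = $2 + 0 }
        $1 == "Quorum"      { quorum = $2 + 0 }
        END { exit !(quorum > 0 && total >= quorum) }
    '
}
```

On a node this could be used as `cman_tool status | status_is_quorate && echo quorate`. With the values above (3 total votes, quorum 2) the check succeeds.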

- /etc/cluster/cluster.conf example

    <?xml version="1.0"?>
    <cluster alias="webcluster2" config_version="16" name="webcluster2">
        <fence_daemon clean_start="0" post_fail_delay="0" post_join_delay="3"/>
        <clusternodes>
            <clusternode name="node1.tekkom.dk" nodeid="1" votes="1">
                <fence/>
            </clusternode>
            <clusternode name="node2.tekkom.dk" nodeid="2" votes="1">
                <fence/>
            </clusternode>
            <clusternode name="node3.tekkom.dk" nodeid="3" votes="1">
                <fence/>
            </clusternode>
        </clusternodes>
        <cman/>
        <fencedevices/>
        <rm>
            <failoverdomains>
                <failoverdomain name="webcluster-failover" nofailback="0" ordered="0" restricted="1">
                    <failoverdomainnode name="node2.tekkom.dk" priority="1"/>
                </failoverdomain>
            </failoverdomains>
            <resources>
                <apache config_file="conf/httpd.conf" name="Appache2" server_root="/mnt/iscsi" shutdown_wait="0"/>
                <script file="/etc/rc.d/init.d/httpd" name="apachescript"/>
            </resources>
            <service autostart="1" domain="webcluster-failover" exclusive="0" name="apache_service"/>
        </rm>
    </cluster>
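When cluster.conf is edited by hand, the usual practice on RHEL/CentOS 5 is to raise config_version by one before propagating the file (for example with `ccs_tool update /etc/cluster/cluster.conf`); this is not stated in the example above and is mentioned here as background. The sketch below only reads the current version and prints the next one. The helper names are made up, and the sed pattern assumes config_version sits on the same line as the opening cluster tag, as in the example.

```shell
#!/bin/sh
# Print the config_version attribute of the <cluster> element in a cluster.conf file.
# (Illustrative helpers; they do not modify the file.)
current_config_version() {
    sed -n 's/.*<cluster [^>]*config_version="\([0-9][0-9]*\)".*/\1/p' "$1" | head -n 1
}

# Print the version number the next edit of the file should carry.
next_config_version() {
    echo $(( $(current_config_version "$1") + 1 ))
}
```

For the example file, `current_config_version /etc/cluster/cluster.conf` prints 16 and `next_config_version` prints 17.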

Configuration of services

Virtual IP

Add a resource of type IP Address. Then add a service, select add a resource, and select the IP Address resource.
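In cluster.conf terms, these two steps correspond roughly to an ip resource plus a service that references it. The shell sketch below just prints such a fragment for inspection; it does not touch the configuration. The helper name `virtual_ip_fragment` and the address 192.168.138.200 are assumptions made for this example, while the service and failover domain names are taken from the cluster.conf example in this article.

```shell
#!/bin/sh
# Print a cluster.conf fragment for a floating (virtual) IP address.
# $1 = virtual IP address, $2 = service name, $3 = failover domain
# (virtual_ip_fragment is an illustrative helper, not a cluster tool.)
virtual_ip_fragment() {
    cat <<EOF
<resources>
    <ip address="$1" monitor_link="1"/>
</resources>
<service autostart="1" domain="$3" name="$2">
    <ip ref="$1"/>
</service>
EOF
}

# Hypothetical address in the same network as the example node address.
virtual_ip_fragment 192.168.138.200 apache_service webcluster-failover
```

The printed elements belong inside the rm section of cluster.conf; the service refers to the ip resource by its address.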

Manuals

Clustering

- Red Hat 5.2 Cluster Administration

- Red Hat 5.2 Cluster Suite Overview

- Red Hat 5.2 Deployment Guide

- Red Hat 5.2 Using Device Mapper - Configuring and administration

- Red Hat 5.2 GFS Global File System

- GNBD Global Network Block Device - Using with GFS

- SAN Survival Guide

- Red Hat 5.2 Cluster LVM (Logical Volume Manager)

- nfscookbook - Good example of a setup with great explanations (loads PDF file)

- aiscman tutorial (loads PDF file)

- FreeNAS - Network Attached Storage solution